Will the Future Be Silent for Some?

Since this article was first written, Alexa has introduced a response that acknowledges when a room is too noisy. While this may seem like an improvement, it does not solve the core issue: Alexa still struggles to carry out the request. The update serves more as a buffer against frustration than as a meaningful step toward inclusivity, and the concerns explored in this article remain just as relevant today.

The Growing Role of Voice Interfaces in AI

As Artificial Intelligence (AI) continues its rapid evolution, the world is moving closer to a future dominated by Voice User Interfaces (VUIs) — where speaking to technology is not just an option, but the primary mode of interaction.

From smart home assistants to health-monitoring applications, AI-powered VUIs are becoming so deeply embedded in daily life that one could argue modern existence is now intertwined with machine cognition.

Already, major tech giants, including Amazon (Alexa), Apple (Siri), Microsoft (Cortana), and Google (Google Assistant), have built voice recognition systems that serve as virtual assistants: streamlining tasks, answering queries, and even improving accessibility. By one widely cited estimate, more than 4.2 billion digital voice assistants are in use worldwide.

But with this increasing reliance on VUIs, a critical question arises:

Were these devices truly designed for everyone?

What usability factors were considered during their creation?

And as AI advances, what new design improvements should we prioritize to ensure inclusivity?

To explore these questions, this article will analyze Amazon’s Alexa through the lens of human factors engineering — specifically using Gerhardt-Powals’ cognitive engineering principles as a benchmark. By applying these principles, we can assess Alexa’s current usability and identify areas where VUI design can evolve to be more adaptive, equitable, and accessible to all users.

Morgan and the Case of the Eclectic Gathering

The holiday season brings people together — sometimes in crowded, lively spaces filled with overlapping conversations, clinking glasses, and music pulsing in the background.

At a recent gathering in Washington, D.C., my friend Morgan, a boisterous Texan with a flair for hosting, found herself in an unexpected battle — with her own smart home assistant.

As the evening carried on and the music grew too loud, Morgan decided to use Alexa — Jeff Bezos’ Star Trek-inspired voice-recognition dream made real — to lower the volume. She called out a command. Alexa didn’t respond.

With a half-hearted chuckle, she tried again. No response.

The room quieted slightly as she raised her voice, but just as she spoke again, a male guest cut in mid-sentence, breaking her rhythm. Alexa remained silent.

Growing frustrated, Morgan shushed the room, squared her stance, and shouted Alexa’s name in a firmer, more authoritative tone. Yet the device still refused to acknowledge her.

Then, John, a British male guest, calmly issued the same command.

Alexa responded immediately.

The room erupted into laughter, but beneath the humor, a troubling question lingered: Why did Alexa recognize John but not Morgan?

What followed wasn’t so funny. Flippant remarks flew across the room:

“Guess you need a man’s voice to get her attention.”

“Maybe Alexa only listens to Brits.”

“She’s biased.”

Was this just a glitch, or was there a deeper design flaw at play?

When Design Reflects Human Bias

This moment may have been brushed off as party humor, but it underscores a fundamental issue in voice interface design, one that both Gerhardt-Powals’ cognitive engineering principles and Jakob Nielsen’s usability heuristics speak to.

In his article “Voice Interfaces: Assessing the Potential,” Nielsen critiques VUIs, stating:

“Visual interfaces are inherently superior to auditory interfaces for many tasks. The Star Trek fantasy of speaking to your computer is not the most fruitful path to usable systems.”

While Nielsen sees promise in multimodal interaction (combining voice and visuals), Alexa’s current design shortcomings reveal the risks of relying on auditory input alone.

Alexa failed to reduce uncertainty — a core principle of human factors design.

She provided no feedback on why she wasn’t responding.

The device’s training data likely favored certain voices over others, leading to a biased user experience.

This issue goes beyond technical malfunctions — it reflects a broader challenge in AI development:

Who are these devices optimized for?

Whose voices do they recognize first?

As I reflected on this moment, I recalled a discussion from Frank Chimero’s The Shape of Design, where he describes design as life-enhancing, a tool for making things better. Sidney Dekker’s “New View” of human error supplies the other half: when technology fails, the problem lies not with the user but with the system that was built.

Morgan didn’t do anything wrong. The failure was Alexa’s inability to process her voice accurately in a real-world setting. Instead of assuming the user should adjust their voice to fit the system, we should be asking:

How was Alexa designed?

What voices were prioritized during development?

And what biases were unintentionally built into her system?

The answer lies in who creates, trains, and refines these models, and in whether they treat design as an evolving process that keeps correcting these flaws.

Humans Are Flawed: That Makes Us

Gerhardt-Powals’ cognitive engineering principles emphasize situational awareness, ensuring both humans and systems operate with clarity, responsiveness, and adaptability.

Yet, when it comes to Voice User Interfaces (VUIs), most design decisions still favor default usability over true adaptability. This is evident in the way Alexa failed Morgan — not because she spoke incorrectly, but because Alexa’s recognition model failed in a real-world scenario.

Two key cognitive engineering principles highlight these failures:

Reduce Uncertainty — Systems should provide clear, responsive feedback instead of leaving users frustrated.

Present New Information with Meaningful Aids to Interpretation — Information should be conveyed in familiar, intuitive ways so users can easily understand system limitations.

At the party, Alexa didn’t just fail to recognize Morgan’s voice — it failed to provide any signal that it was struggling. This moment exposed a fundamental design flaw:

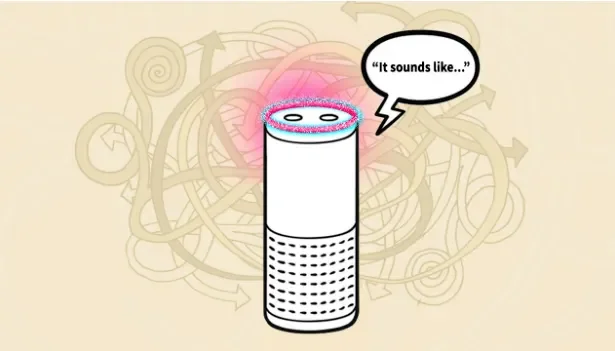

What If Alexa Communicated Its Struggles?

Instead of forcing Morgan into a trial-and-error guessing game, Alexa could have:

Used its LED halo light to signal confusion (e.g., flashing a red and white pattern to indicate poor audio clarity).

Verbalized its limitation in real-time:

“It sounds like the room is noisy. I’m having trouble understanding. Can you try again?”

Adapted dynamically by enhancing its sensitivity to familiar voices over generic sound input.

These small but critical refinements would align Alexa more closely with Gerhardt-Powals’ principles, reducing friction while keeping users informed.
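To make the first two refinements concrete, here is a minimal sketch of the kind of decision rule an assistant could apply before staying silent. Amazon does not publish Alexa’s internal logic, so everything here is hypothetical: the function `choose_feedback`, the two thresholds, and the light-pattern names are illustrative assumptions, and the inputs (an ambient noise estimate and a recognizer confidence score) are simply assumed to be available.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds, chosen only for illustration; a real device would tune these.
NOISE_LIMIT_DB = 70.0   # ambient level above which speech is assumed hard to separate
MIN_CONFIDENCE = 0.6    # recognizer confidence below which a command is not acted on


@dataclass
class Feedback:
    light_pattern: str            # what a (hypothetical) halo ring should show
    spoken_prompt: Optional[str]  # what the assistant should say, if anything
    execute: bool                 # whether to carry out the recognized command


def choose_feedback(noise_db: float, confidence: float) -> Feedback:
    """Map ambient noise and recognition confidence to user-facing feedback."""
    if confidence >= MIN_CONFIDENCE:
        # Confident enough: act, with the usual acknowledgement light.
        return Feedback("solid_blue", None, True)
    if noise_db > NOISE_LIMIT_DB:
        # Low confidence in a loud room: say why, instead of staying silent.
        return Feedback(
            "flash_red_white",
            "It sounds like the room is noisy. I'm having trouble understanding. "
            "Can you try again?",
            False,
        )
    # Low confidence in a quiet room: ask for a repeat without blaming the noise.
    return Feedback("pulse_amber", "Sorry, I didn't catch that. Could you say it again?", False)


if __name__ == "__main__":
    # Morgan's situation as modeled here: a loud room and a low-confidence match.
    print(choose_feedback(noise_db=78.0, confidence=0.35))
```

The point of the sketch is the ordering: a low-confidence result never ends in silence. It always produces either an action or an explanation, which is what Gerhardt-Powals’ “reduce uncertainty” asks for.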

The Misconception of AI “Intelligence”

Part of the frustration with AI-powered assistants stems from the gap between our expectations and reality.

There’s a cultural fear that AI is inching toward true intelligence, reinforced by decades of sci-fi (HAL 9000, AUTO, and other malevolent AI archetypes) and doomsday scenarios about sentient machines.

But the truth is, Alexa isn’t smart — she’s just a highly advanced tool.

AI today operates on narrow intelligence (weak AI), meaning it:

Learns from patterns but doesn’t understand intent.

Processes voice commands but doesn’t adapt contextually.

Fails in unpredictable environments, just like at Morgan’s party.

This aligns with Professor Ragnar Fjelland’s argument in Why General Artificial Intelligence Will Not Be Realized. He draws from Dreyfus and Dreyfus’ theories, emphasizing that true intelligence requires physicality, cultural learning, and trial-and-error experiences.

In other words, a machine that has no body, no lived experience, and no cultural practice cannot truly “learn” like a human.

Which brings us back to Alexa’s design flaw — if AI cannot learn like humans, then why are we expecting it to act human?

The real design challenge isn’t making AI smarter — it’s making AI clearer.

Designing Alexa for Real-World Imperfections

The biggest issue with Alexa’s failure at the party wasn’t the error itself — it was the lack of feedback.

This is where the halo light feature becomes a missed opportunity in current VUI design.

Alexa’s light ring already uses color to signal different states, such as blue when listening and red when the microphone is off, but these signals are underutilized in real-world error handling.

Imagine if, during Morgan’s party, Alexa:

Recognized audio overload and flashed a warning color.

Spoke back to the user with a clear explanation of what was happening.

Allowed real-time tuning to prioritize the primary user’s voice over background noise.

This is not a complex fix. It’s a UX and engineering refinement that aligns with cognitive engineering principles while enhancing user trust in AI.

Final Thoughts: AI Isn’t Flawed — Its Design Is

The issue with AI-powered assistants isn’t that they aren’t intelligent enough — it’s that their design prioritizes default usability over real-world adaptability.

If we apply Gerhardt-Powals’ principles correctly, Alexa should:

Provide better feedback on recognition failures.

Use visual and verbal cues to communicate limitations.

Prioritize adaptive, human-centered learning over rigid training data.

Because at the end of the day, technology isn’t meant to be perfect.

Humans are flawed — that makes us.

And if we want AI to work for us, it has to be designed to work with our imperfections, not against them.

Beyond VUI — Addressing the Bias in Alexa’s Design

Gerhardt-Powals’ cognitive engineering principles offer multiple pathways for improving Alexa’s usability, but they fail to account for systemic biases in AI-driven voice recognition.

Implicit bias is not always a result of malicious design — often, it emerges from the absence of diverse input during the development process. AI, after all, only learns what it is trained on.

Just today, I experienced the same frustration that Morgan did at her party. While playing music, I asked Alexa to change the song. Instead of complying, she defaulted — seven times — to Kate Bush, an artist I frequently listen to on Spotify. It was as if Alexa had decided that, rather than deciphering my request, she would revert to an assumed preference.

Then, my husband Greg — white, US-born, with a deeper voice — gave the same command.

Alexa responded immediately.

We were both standing in the same room, at the same distance from the device. Yet, she recognized him, not me.

Why?

Gerhardt-Powals’ Principle: Reduce Uncertainty

One of Gerhardt-Powals’ core principles is Reduce Uncertainty: a system should present clear, obvious responses that minimize error and confusion.

Alexa’s failure to recognize my request introduced uncertainty into the interaction.

She neither adapted nor explained her difficulty; instead of prompting me for clarification, she fell back on a predetermined default (Kate Bush).

When Greg spoke, Alexa processed his voice accurately and immediately, pointing to a bias baked into her training data.

Gerhardt-Powals’ Principle: Automate Unwanted Workload

Another key principle holds that a system should reduce user effort by handling routine problems itself, without manual intervention.

Instead of forcing me to repeat myself seven times, Alexa should have recognized repeated failed attempts as an error signal.

A well-designed AI would have adjusted dynamically, either by prompting me for a clearer command or by increasing its sensitivity to my voice based on previous interactions.

If Alexa had been designed with this principle in mind, I wouldn’t have had to do the extra work of debugging her failure manually.
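Treating repeated failures as an error signal is straightforward to express. The sketch below is a hypothetical `RetryMonitor`, not anything Amazon has documented. It assumes the caller can decide whether each attempt failed, for example because the recognizer’s confidence was low or because the user immediately asked for something different after a default was played. After a set number of recent failures, it stops guessing and asks for clarification. The limit of two failures and the 60-second window are arbitrary illustrative values.

```python
import time
from collections import deque
from typing import Deque, Optional


class RetryMonitor:
    """Hypothetical tracker that treats repeated failed requests as an error signal.

    The caller decides whether each attempt failed (e.g. low recognizer
    confidence, or the user overriding the result right away).
    """

    def __init__(self, max_failures: int = 2, window_s: float = 60.0):
        self.max_failures = max_failures  # failures tolerated before escalating
        self.window_s = window_s          # only failures this recent are counted
        self._failures: Deque[float] = deque()

    def record(self, failed: bool, now: Optional[float] = None) -> str:
        """Record one attempt and return the action the assistant should take."""
        now = time.monotonic() if now is None else now
        # Forget failures that fall outside the time window.
        while self._failures and now - self._failures[0] > self.window_s:
            self._failures.popleft()
        if not failed:
            self._failures.clear()  # a success resets the streak
            return "execute"
        self._failures.append(now)
        if len(self._failures) >= self.max_failures:
            # Stop guessing: explain and ask, rather than replaying a default.
            return "ask_for_clarification"
        return "retry"


if __name__ == "__main__":
    monitor = RetryMonitor()
    for t in (0.0, 4.0, 9.0):
        print(monitor.record(failed=True, now=t))  # retry, then ask_for_clarification
```

Under this rule, the seven-request loop described above would have turned into a clarifying question on the second miss. The other adaptation the paragraph mentions, increasing sensitivity to a particular user’s voice, would depend on the speech model itself and is deliberately left out of this sketch.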

Bias Is a Design Flaw, Not an Inevitable Limitation

At its core, Alexa’s failure here is not just a bug — it’s a fundamental design flaw. If Gerhardt-Powals’ principles were fully applied, Alexa’s voice recognition system would:

Reduce errors in recognizing diverse speech patterns.

Adapt dynamically to users rather than defaulting to assumptions.

Prioritize personalized voice recognition instead of favoring dominant demographic groups.

Yet, because these principles were not fully integrated into VUI design, the result is a system that functions better for some voices than others.

And when AI systematically fails to recognize certain people, it effectively erases them from the conversation.

Implicit Bias in AI — and the Voices Left Out

Implicit bias means holding a preference for (or aversion to) a person or group rather than remaining neutral. We all carry unconscious attitudes and stereotypes, often without realizing it.

I’m not saying that people intentionally design AI to discriminate. I sincerely hope we are past the era of systematically excluding voices on purpose. But when tech development remains dominated by a single race, gender, or perspective, bias creeps in unnoticed.

And for those of us who stutter, voice technology is more than just a convenience — it’s a daily hurdle.

For the first time in my life, stuttering has entered public consciousness in a meaningful way. Seeing a prominent public figure openly discuss their speech disability was both empowering and emotional for me. I remember the frustration and sadness of watching people mock speech impairments; this time, instead of defensiveness, the conversation shifted toward awareness, empathy, and understanding.

That’s exactly the mindset we need in AI and voice technology.

Because as we move forward into a voice-activated world, where conversation is becoming our newest digital interface, we need to ask:

Are we designing for all voices — or just the ones AI has been trained to recognize?

1 in 100 people stutter, yet voice assistants struggle to accommodate us. How will AI developers tackle one of the oldest human interfaces — speech — when billions of dollars and the future of accessibility are on the line?

I, for one, want my voice to be heard.